How to Install Kubernetes (k8s) v1.21.0 on CentOS 7.x?

Kubernetes (k8s) is an open source system for managing containerized applications across multiple hosts, providing basic mechanisms for deployment, maintenance, and scaling of applications. The open source project is hosted by the Cloud Native Computing Foundation (CNCF).

It groups containers that make up an application into logical units for easy management and discovery. Kubernetes builds upon 15 years of experience of running production workloads at Google, combined with best-of-breed ideas and practices from the community.

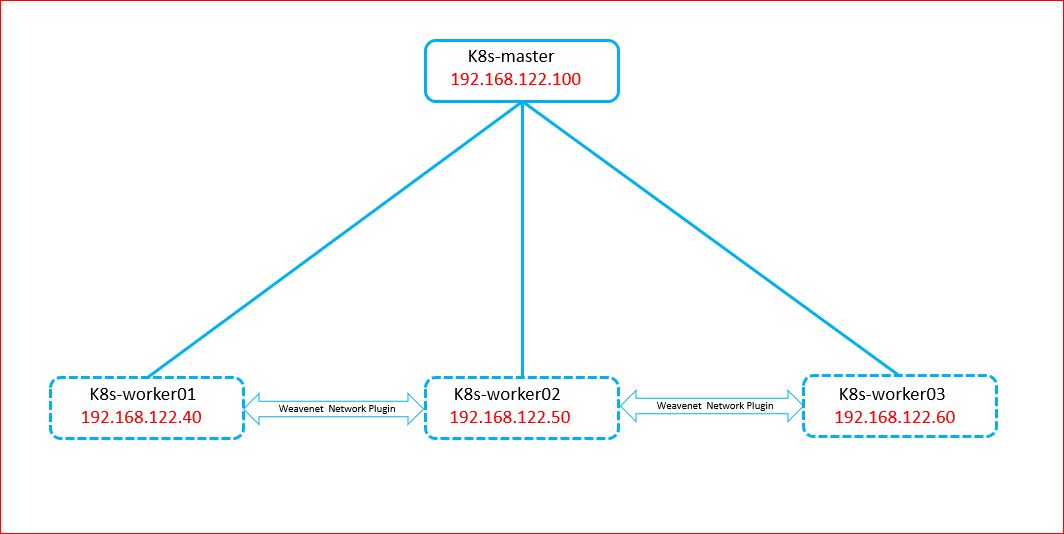

Kubernetes is also known as k8s, as there are 8 letters between the 'k' and the 's'. It follows a client-server architecture, where the server is known as the master and the clients are known as workers. In this article we will use one master and three worker nodes to deploy the Kubernetes cluster. From the master node, we bootstrap the cluster with 'kubeadm' and manage it with 'kubectl'.

Here is a simple architecture I have used to prepare the kubernetes cluster.

Kubernetes Master node Components:

a. kube-apiserver: It's the front end of the Kubernetes control plane and exposes the Kubernetes API. It's designed to scale horizontally.

b. etcd: Consistent and highly-available key value store used as Kubernetes’ backing store for all cluster data.

c. kube-scheduler: It watches newly created pods that have no node assigned, and selects a node for them to run on.

d. kube-controller-manager: Logically, each controller is a separate process, but to reduce complexity, they are all compiled into a single binary and run in a single process. These controllers include 'node controller', 'replication controller', 'endpoints controller' and 'service account & token controllers'.

Kubernetes Worker node Components:

a. kubelet: An agent that runs on each node in the cluster. It makes sure that containers are running in a pod.

b. kube-proxy: It enables the Kubernetes service abstraction by maintaining network rules on the host and performing connection forwarding.

c. Container runtime: The container runtime is the software responsible for running containers. Kubernetes supports several runtimes: Docker, rkt, runc and any OCI runtime-spec implementation. Here I am using Docker.

[*] For more information, refer to the upstream Kubernetes documentation on cluster components.

Installation Steps for Kubernetes Master node:

NOTE: Make sure swap is disabled on the master node; otherwise the kubelet service won't start. 'free -m' should report 0 for swap:

[root@k8s-master ~]# free -m

total used free shared buff/cache available

Mem: 3950 727 2420 10 802 2976

Swap: 0 0 0
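
If 'free -m' reports non-zero swap, it has to be turned off for the running system and kept off across reboots. Below is a minimal sketch of the persistent part: it comments out swap entries in an fstab-style file. The function name comment_out_swap is hypothetical, and it assumes swap entries are identified by the third fstab field (filesystem type) being 'swap', as in a default CentOS 7 install.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# Assumes the 3rd field (filesystem type) of a swap entry is "swap".
comment_out_swap() {
  local fstab="${1:-/etc/fstab}" tmp rc
  tmp=$(mktemp) || return 1
  # Prefix '#' to non-comment lines whose fs type is "swap"; print all other lines unchanged.
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab"   # write back in place, keeping the original file's owner and mode
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

On a node, as root, 'swapoff -a' turns swap off immediately, and 'comment_out_swap /etc/fstab' keeps it off after the reboot later in this guide.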

1. Load the 'br_netfilter' kernel module, as it is required to enable transparent masquerading and to facilitate VxLAN traffic for communication between Kubernetes pods across the cluster.

[root@k8s-master ~]# lsmod|grep br_netfilter

[root@k8s-master ~]# modprobe br_netfilter

[root@k8s-master ~]# lsmod|grep br_netfilter

br_netfilter 22256 0

bridge 146976 1 br_netfilter

2. Kubernetes requires that packets traversing a network bridge are processed by iptables for filtering and port forwarding. To achieve this, the tunable parameters in the kernel bridge module are set automatically when the kubeadm package is installed. Still, make sure that the setting is enabled:

[root@k8s-master ~]# cat /proc/sys/net/bridge/bridge-nf-call-iptables

1

[root@k8s-master ~]# sysctl -a | egrep -i bridge-nf-call-iptables

net.bridge.bridge-nf-call-iptables = 1

NOTE: In CentOS 7.7 and later, the steps above may not be enough to load the br_netfilter module. In that case, the following steps will help:

[root@k8s-master ~]# echo br_netfilter > /etc/modules-load.d/br_netfilter.conf

[root@k8s-master ~]# echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

[root@k8s-master ~]# sysctl --system
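
Because '>>' appends unconditionally, re-running the two echo commands above adds duplicate lines each time. A minimal idempotent sketch follows; ensure_line is a hypothetical helper name, and it only detects an exact whole-line match (a line with different spacing around '=' would still be appended, which is harmless since the last setting wins).

```shell
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE only if FILE lacks that exact line.
ensure_line() {
  local file="$1" line="$2"
  # -q: quiet, -x: match the whole line, -F: fixed string (no regex); "--" ends options.
  # A missing FILE makes grep fail, so the line is appended and the file created.
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

Usage, as root: 'ensure_line /etc/modules-load.d/br_netfilter.conf br_netfilter' and 'ensure_line /etc/sysctl.conf "net.bridge.bridge-nf-call-iptables = 1"', followed by 'sysctl --system'.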

3. Disable SELinux and Firewalld:

[root@k8s-master ~]# sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

[root@k8s-master ~]# systemctl stop firewalld

[root@k8s-master ~]# systemctl disable firewalld

[root@k8s-master ~]# systemctl mask firewalld
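
The sed edit only changes the mode used at the next boot, and it only rewrites the line if it currently reads 'enforcing'. On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, which is why '--follow-symlinks' is used (without it, 'sed -i' would replace the symlink with a regular file). The hypothetical helper below reads back the configured mode so the edit can be confirmed; 'getenforce' shows the live mode and 'setenforce 0' switches the running system to permissive until the reboot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SELINUX= mode set in an SELinux config file.
selinux_config_mode() {
  local cfg="${1:-/etc/selinux/config}"
  # "^SELINUX=" does not match "SELINUXTYPE=" lines; keep only the last match.
  sed -n 's/^SELINUX=//p' "$cfg" | tail -n 1
}
```

After running the sed command above, 'selinux_config_mode' should print 'disabled'.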

4. If DNS is not configured for the FQDNs of the master and worker nodes, map them in the '/etc/hosts' file:

[root@k8s-master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.122.100 k8s-master.example.com k8s-master

192.168.122.40 k8s-worker01.example.com k8s-worker01

192.168.122.50 k8s-worker02.example.com k8s-worker02

192.168.122.60 k8s-worker03.example.com k8s-worker03
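
Since the same four entries are needed on every node, a small script can add them without creating duplicates when it is run twice. This is a sketch; add_host_entries is a hypothetical name, and it treats an entry as already present if its FQDN appears as a hostname on any non-comment line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP FQDN SHORTNAME" entries to a hosts file,
# skipping any entry whose FQDN is already mapped on a non-comment line.
add_host_entries() {
  local hosts_file="$1" entry fqdn
  shift
  for entry in "$@"; do
    read -r _ fqdn _ <<< "$entry"   # second whitespace-separated field is the FQDN
    if ! awk -v h="$fqdn" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                          END { exit !found }' "$hosts_file"; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done
}
```

For example: add_host_entries /etc/hosts '192.168.122.100 k8s-master.example.com k8s-master' '192.168.122.40 k8s-worker01.example.com k8s-worker01' (and so on for the remaining workers).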

5. Add the Kubernetes yum repository:

[root@k8s-master ~]# cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

NOTE: Before moving to the next step, reboot the node once so that the SELinux and kernel module changes take effect.

6. Install the kubeadm and docker packages:

[root@k8s-master ~]# yum install kubeadm docker -y
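
The title targets v1.21.0, but 'yum install kubeadm' installs the newest kubeadm in the repository, and its kubelet and kubectl dependencies typically resolve to the newest versions as well. To stay on one release, the three packages can be pinned with yum's name-version syntax. The helper below is a hypothetical convenience that builds that package list; which version strings exist depends on the repository ('yum list --showduplicates kubeadm' shows them).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print pinned package names for one Kubernetes version,
# e.g. "kubeadm-1.21.0 kubelet-1.21.0 kubectl-1.21.0".
k8s_packages() {
  local version="${1:?usage: k8s_packages VERSION}" pkg out=()
  for pkg in kubeadm kubelet kubectl; do
    out+=("${pkg}-${version}")
  done
  printf '%s\n' "${out[*]}"   # join with spaces (default IFS)
}
```

Usage: yum install -y $(k8s_packages 1.21.0) docker (the command substitution is left unquoted on purpose so it splits into three package names).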

7. Enable and start the kubelet and docker services:

[root@k8s-master ~]# systemctl enable docker && systemctl restart docker

[root@k8s-master ~]# systemctl enable kubelet && systemctl restart kubelet

8. Initialize the Kubernetes control plane with the following command:

[root@k8s-master ~]# kubeadm init

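To start using the cluster, copy the admin kubeconfig into your home directory ('kubeadm init' prints these commands at the end of its output):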

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config
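
For scripted setups, the three commands above can be wrapped in a function. This sketch (setup_kubeconfig is a hypothetical name) drops the interactive '-i' from cp, since a prompt would stall a non-interactive script, and additionally restricts the file to mode 600 because admin.conf carries cluster-admin credentials; both are choices of this sketch rather than kubeadm requirements. When working as root, 'export KUBECONFIG=/etc/kubernetes/admin.conf' is a simpler alternative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install a kubeconfig as HOME_DIR/.kube/config for the current user.
setup_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}" home_dir="${2:-$HOME}"
  local dest="$home_dir/.kube/config"
  mkdir -p "$home_dir/.kube" || return 1
  cp "$src" "$dest" || return 1                   # non-interactive copy (overwrites)
  chown "$(id -u):$(id -g)" "$dest" || return 1   # same ownership change as the manual step
  chmod 600 "$dest"                               # readable only by its owner
}
```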

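9. Check the nodes and pods:

[root@k8s-master ~]# kubectl get nodes

[root@k8s-master ~]# kubectl get pods --all-namespaces

NOTE: Without a pod network the cluster is not fully ready: the master node reports NotReady and the CoreDNS pods stay Pending. So follow step 10 and deploy the Weave Net based Container Network Interface (CNI).

10. Deploy Weave Net: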

[root@k8s-master ~]# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.extensions/weave-net created

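Now run the same commands again (kubectl get nodes and kubectl get pods --all-namespaces) to verify that the master node is Ready and the pods are Running.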

Installation Steps on each Kubernetes Worker node:

NOTE: Make sure swap is disabled on the worker nodes; otherwise the kubelet service won't start. 'free -m' should report 0 for swap:

[root@k8s-workerX ~]# free -m

total used free shared buff/cache available

Mem: 3950 727 2420 10 802 2976

Swap: 0 0 0

1. Load the 'br_netfilter' kernel module, as it is required to enable transparent masquerading and to facilitate VxLAN traffic for communication between Kubernetes pods across the cluster.

[root@k8s-workerX ~]# lsmod|grep br_netfilter

[root@k8s-workerX ~]# modprobe br_netfilter

[root@k8s-workerX ~]# lsmod|grep br_netfilter

br_netfilter 22256 0

bridge 146976 1 br_netfilter

2. Kubernetes requires that packets traversing a network bridge are processed by iptables for filtering and port forwarding. To achieve this, the tunable parameters in the kernel bridge module are set automatically when the kubeadm package is installed. Still, make sure that the setting is enabled:

[root@k8s-workerX ~]# cat /proc/sys/net/bridge/bridge-nf-call-iptables

1

[root@k8s-workerX ~]# sysctl -a | egrep -i bridge-nf-call-iptables

net.bridge.bridge-nf-call-iptables = 1

NOTE: In CentOS 7.7 and later, the steps above may not be enough to load the br_netfilter module. In that case, the following steps will help:

[root@k8s-workerX ~]# echo br_netfilter > /etc/modules-load.d/br_netfilter.conf

[root@k8s-workerX ~]# echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

[root@k8s-workerX ~]# sysctl --system

3. Disable SELinux and Firewalld:

[root@k8s-workerX ~]# sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

[root@k8s-workerX ~]# systemctl stop firewalld

[root@k8s-workerX ~]# systemctl disable firewalld

[root@k8s-workerX ~]# systemctl mask firewalld

4. If DNS is not configured for the FQDNs of the master and worker nodes, map them in the '/etc/hosts' file:

[root@k8s-workerX ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.122.100 k8s-master.example.com k8s-master

192.168.122.40 k8s-worker01.example.com k8s-worker01

192.168.122.50 k8s-worker02.example.com k8s-worker02

192.168.122.60 k8s-worker03.example.com k8s-worker03

5. Add the Kubernetes yum repository:

[root@k8s-workerX ~]# cat > /etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

NOTE: Before moving further, reboot the worker nodes once so that the SELinux and kernel module changes take effect.

6. Install the kubeadm and docker packages:

[root@k8s-workerX ~]# yum install kubeadm docker -y

7. Enable and start the kubelet and docker services:

[root@k8s-workerX ~]# systemctl enable docker && systemctl restart docker

[root@k8s-workerX ~]# systemctl enable kubelet && systemctl restart kubelet

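8. Join each worker node to the master by running the 'kubeadm join' command printed at the end of the 'kubeadm init' output (the token and hash below are from my cluster; use your own):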

[root@k8s-workerX ~]# kubeadm join 192.168.122.100:6443 --token 6ngl1v.av6wos1vnuoyntts --discovery-token-ca-cert-hash sha256:9385d420a3318532dc8de8cc198e6fb2dc16c67806e2949ebe96e4a8a4126537
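
Bootstrap tokens created by 'kubeadm init' expire after 24 hours by default; after that, 'kubeadm token create --print-join-command' on the master prints a fresh join command. When values are pasted by hand, a quick format check catches copy errors. The helper below (valid_join_args is a hypothetical name) checks only the shape: a token of the form [a-z0-9]{6}.[a-z0-9]{16} and a CA hash of 'sha256:' plus 64 hex digits. It cannot tell whether the token is still valid on the cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check the format (not validity) of kubeadm join arguments.
valid_join_args() {
  local token="$1" ca_hash="$2"
  [[ $token =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]] ||
    { echo "malformed token: $token" >&2; return 1; }
  [[ $ca_hash =~ ^sha256:[0-9a-f]{64}$ ]] ||
    { echo "malformed CA cert hash: $ca_hash" >&2; return 1; }
}
```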

9. Then go to the Kubernetes master and check the status of all the nodes:

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 12m v1.21.0

k8s-worker01 NotReady <none> 11s v1.21.0

k8s-worker02 NotReady <none> 15s v1.21.0

k8s-worker03 NotReady <none> 45s v1.21.0

Wait a while for the worker nodes to become Ready, then check again:

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 12m v1.21.0

k8s-worker01 Ready <none> 65s v1.21.0

k8s-worker02 Ready <none> 69s v1.21.0

k8s-worker03 Ready <none> 99s v1.21.0
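
Instead of re-running 'kubectl get nodes' by hand, the wait can be scripted. This is a sketch with hypothetical function names: not_ready_nodes parses the default 'kubectl get nodes' table (header included) and lists nodes whose STATUS is not exactly Ready, and wait_for_nodes_ready polls every 10 seconds up to a timeout. kubectl also provides a built-in equivalent: kubectl wait --for=condition=Ready nodes --all --timeout=300s.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output (with header) on stdin and
# print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Hypothetical helper: poll until every node is Ready or TIMEOUT seconds pass.
wait_for_nodes_ready() {
  local timeout="${1:-300}" interval=10 waited=0 out
  while :; do
    out=$(kubectl get nodes 2>/dev/null)
    # Empty output means kubectl failed, so keep waiting instead of reporting success.
    if [ -n "$out" ] && [ -z "$(printf '%s\n' "$out" | not_ready_nodes)" ]; then
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for nodes to become Ready" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}
```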

To label the worker nodes with a role, run the following commands on the master node:

# kubectl label nodes k8s-worker01 kubernetes.io/role=worker1

# kubectl label nodes k8s-worker02 kubernetes.io/role=worker2

# kubectl label nodes k8s-worker03 kubernetes.io/role=worker3

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 14m v1.21.0

k8s-worker01 Ready worker1 3m30s v1.21.0

k8s-worker02 Ready worker2 3m19s v1.21.0

k8s-worker03 Ready worker3 3m14s v1.21.0

As we can see, all three worker nodes have joined the master, and the cluster is ready to use.
